In this brief Section we introduce the basic concept of Recurrent Neural Networks, emphasizing the kind of learning problems they are designed to solve. Unlike the problems of our previous Chapters, these involve dynamic datasets, that is, data that is ordered (often in time). Such data arises in everything from financial time series prediction to machine translation and speech recognition.

In the preceding Chapters we have dealt entirely with datasets whose members are un-ordered, or 'static', in nature. In other words, data that is generated in no particular order. Visually speaking, we can imagine such datasets popping into existence in much the same way as the toy regression dataset shown in the animation below: across the input space of the data, individual points 'pop' into existence largely at random. As you pull the slider from left to right, we show a simple (regression) dataset arising in just this way. This is 'static' data: its members arise in no particular order.

On the other hand, many types of data arise sequentially in an ordered fashion, e.g., when the data is generated in time. This kind of data is often referred to as dynamic, as opposed to 'static'. Below we animate the same regression dataset shown above, only now we show it arising sequentially from left to right in a dynamic fashion.

We can still apply our standard machine learning tools to such ordered or dynamic data. However, the fact that it has this additional structure raises the question: can we leverage this structure to do a better job? This is very much akin to our discussion of convolutional networks in the preceding Chapter, where we leveraged the local spatial correlation of images / video to improve our supervised learning abilities with such data. Here, by analogy, we look at how to codify and leverage order to enhance our learning schemes for ordered datasets.
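One way to see that order carries usable information is to compare how well each point is predicted by the point that arrived just before it, under ordered and un-ordered arrival. The sketch below is only an illustration under stated assumptions: the noisy sinusoid, the random seed, and the helper name `lag1_correlation` are all hypothetical choices made here, not part of the text's own examples.

```python
import numpy as np

def lag1_correlation(y):
    """Correlation between each output and the output that arrived just before it."""
    return float(np.corrcoef(y[:-1], y[1:])[0, 1])

rng = np.random.default_rng(0)  # fixed seed, chosen arbitrarily for repeatability

# Hypothetical toy regression dataset: a noisy sinusoid on an evenly spaced grid.
x = np.linspace(0.0, 1.0, 200)
y = np.sin(2 * np.pi * x) + 0.1 * rng.standard_normal(x.size)

# 'Dynamic' arrival: points arrive in order of their input, left to right.
ordered_corr = lag1_correlation(y)

# 'Static' arrival: the very same points arrive in a random order.
perm = rng.permutation(x.size)
shuffled_corr = lag1_correlation(y[perm])

print(f"lag-1 correlation, ordered arrival:  {ordered_corr:.3f}")
print(f"lag-1 correlation, shuffled arrival: {shuffled_corr:.3f}")
```

With ordered arrival, consecutive outputs should be strongly correlated, whereas after shuffling the correlation should be close to zero even though the set of points is identical. That gap is the kind of additional structure an order-aware model can try to exploit.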

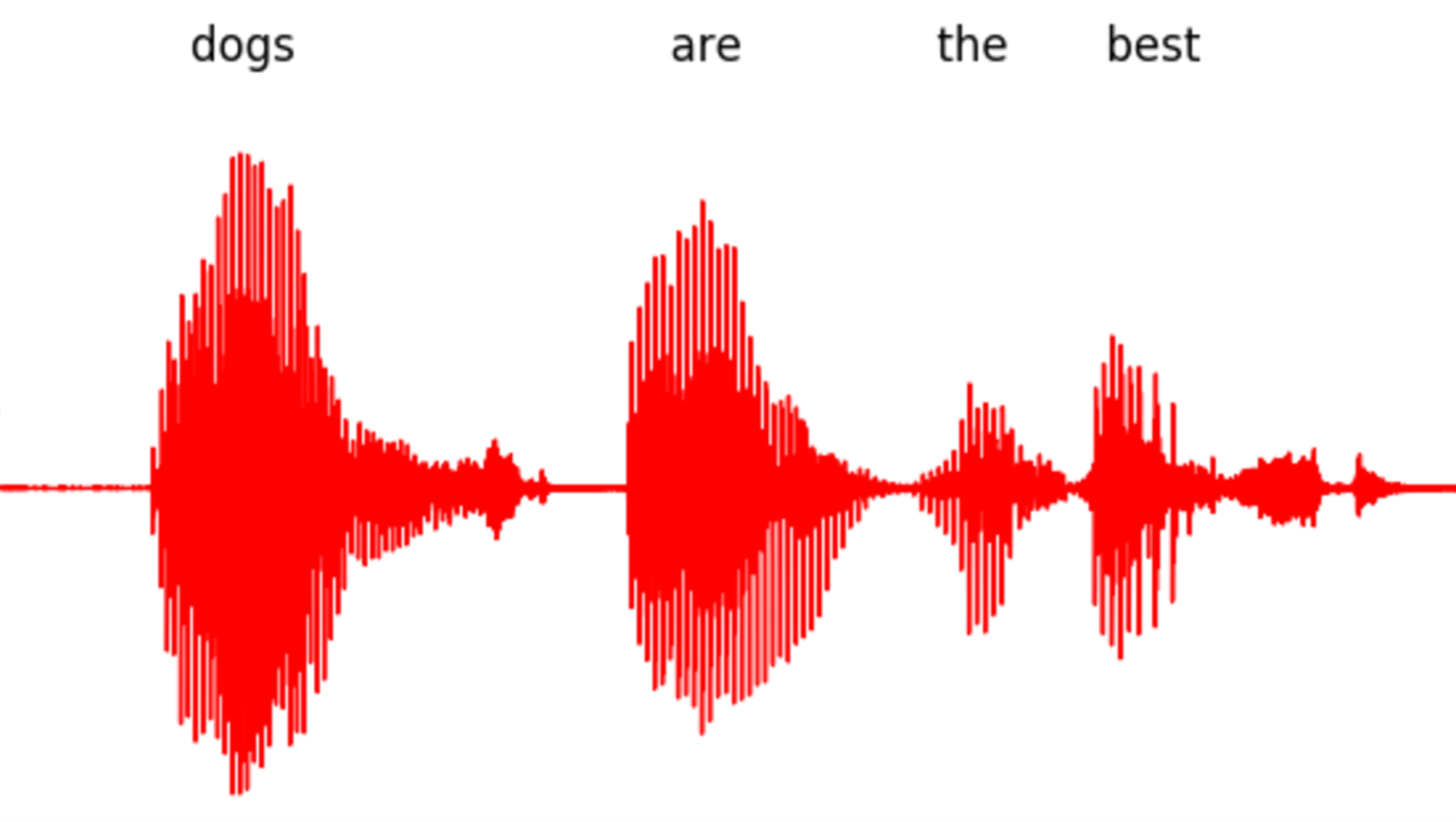

Many popular learning tasks naturally fall into this category. These include various time series prediction tasks, adaptive filtering, speech recognition, and machine translation.

This Chapter details how we can extend the learning framework detailed in previous Chapters to better deal with ordered (or dynamic) datasets, with the most universally applicable tool being Recurrent Neural Networks (or RNNs for short). We begin by discussing dynamic systems in the next two Sections. Much in the same way one learns the equation of a line prior to learning about linear regression, these Sections provide an overview of the kinds of mathematical models employed when learning from ordered data. The Sections that follow then detail various learning procedures employing dynamic systems, including autoregressive processes and culminating in the RNN framework.