In the previous Section we described in words and pictures what the derivative at a point is. In this Section we get more formal and describe these ideas mathematically and programmatically. We will start our discussion with functions that take in only one input, like the familiar sinusoidal function

\begin{equation} g(w) = \text{sin}(w) \end{equation}

which takes in the single input $w$ (we generalize afterwards to functions that take in more than one input).

Remember what we said in words / pictures previously about the derivative of a function at a point: the derivative at a point defines a line that is always tangent to a function, encodes its steepness at that point, and generally matches the underlying function near the point locally. In other words: the derivative at a point is the slope of the tangent line there.

The derivative at a point is the slope of the tangent line at that point.

How can we more formally describe such a tangent line and derivative?

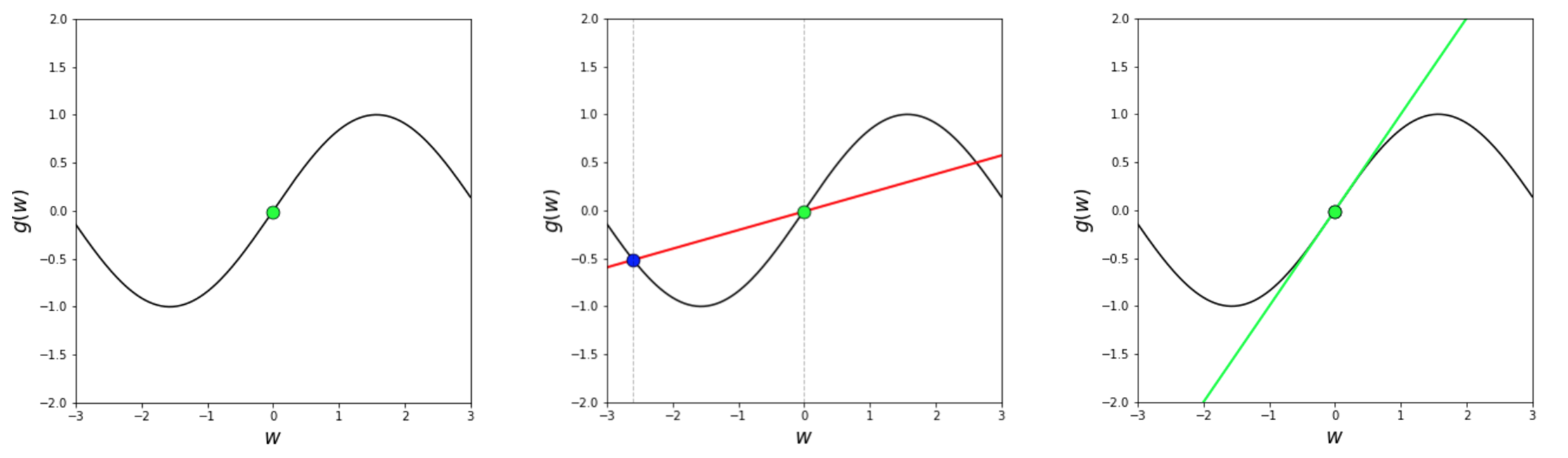

In the image above we show the sinusoid in the left panel, where we have plugged the input point $w^0 = 0$ into the sinusoid and highlighted the corresponding point $(0, \text{sin}(0))$ in green. In the middle panel we plot another point on the curve, $(-2.6, \text{sin}(-2.6))$ in blue, obtained with the input $w^1 = -2.6$, along with the *secant line* in red formed by connecting $(-2.6, \text{sin}(-2.6))$ and $(0, \text{sin}(0))$. Finally, in the right panel we show the tangent line at $w = 0$ in lime green. The gray vertical dashed lines in the middle panel are there for visualization purposes only.

A secant line is just a line formed by taking any two points on a function - like our sinusoid - and connecting them with a straight line. On the other hand, while a tangent line can cross through several points of a function it is explicitly defined using only a single point. So in short - a secant line is defined by two points, a tangent line by just one.

The equation of any secant line is easy to derive - since all we need is the slope and any point on the line to define it - and the slope of a line can be found using any two points on it (like the two points we used to define the secant to begin with).

The slope - the line's 'steepness' or 'rise over run' - is the ratio of the change in output $g(w)$ to the change in input $w$. If we use two generic inputs $w^0$ and $w^1$ (above we chose $w^0 = 0$ and $w^1 = -2.6$), we can write out the slope of a secant line generally as

\begin{equation} \text{slope of a secant line} = \frac{g(w^1) - g(w^0)}{w^1 - w^0} \end{equation}

Now, using the point-slope form of a line, we can directly write out the equation of a secant using the slope above and either of the two points we used to define the secant to begin with. Using $(w^0, g(w^0))$, the equation of the secant line $h(w)$ is

\begin{equation} h(w) = g(w^0) + \frac{g(w^1) - g(w^0)}{w^1 - w^0}(w - w^0) \end{equation}

If we think about our green point at $w^0 = 0$ as fixed, then the tangent line at this point can be thought of as the line we get when we shift the blue point very close - infinitely close actually - to the green one.
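The slope and point-slope formulas above translate almost directly into code. The following is a minimal sketch, not part of the notebook's own library: the helper name `secant_line` is hypothetical, and the inputs are the two points used in the figure, $w^0 = 0$ and $w^1 = -2.6$.

```python
import numpy as np

# hypothetical helper (not from the notebook's library): builds the secant
# line through (w0, g(w0)) and (w1, g(w1)) using the point-slope form
def secant_line(g, w0, w1):
    # rise over run between the two points
    slope = (g(w1) - g(w0)) / (w1 - w0)

    # point-slope form anchored at (w0, g(w0))
    def h(w):
        return g(w0) + slope * (w - w0)

    return slope, h

# the two inputs used in the figure
slope, h = secant_line(np.sin, w0=0.0, w1=-2.6)

print("secant slope:", slope)
print("h(w1) vs g(w1):", h(-2.6), np.sin(-2.6))
```

By construction the secant line agrees with the function at both defining points, which is a quick sanity check on any implementation of the formula.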

Taking $w^0 = 0$ and $w^1 = -2.6$ the equation of the secant line connecting $(w^0,\text{sin}(w^0))$ and $(w^1,\text{sin}(w^1))$ on the sinusoid is given as

\begin{equation} h(w) = \text{sin}(0) + \frac{\text{sin}(-2.6) - \text{sin}(0)}{-2.6 - 0}(w - 0) \end{equation}

Since $\text{sin}(0) = 0$ and $\text{sin}(-2.6) \approx -0.5155$ we can write this as

\begin{equation} h(w) = \frac{0.5155}{2.6}w \approx 0.1983\,w \end{equation}

The next Python cell activates a slider-based animation widget that illustrates how a secant line turns into the tangent line as its two defining points are brought together. As you shift the slider from left to right, the blue point, along with the red secant line that passes through it and the green point, moves closer and closer to our fixed point. Finally, when the two points lie right on top of each other, the secant line becomes the green tangent line at our fixed point.

```python
import numpy as np

# NOTE: calclib is this notebook's own visualization library, assumed to be imported already

# the function we want to play with
g = lambda w: np.sin(w)

# create an instance of the visualizer with this function
st = calclib.secant_to_tangent.visualizer(g = g)

# run the visualizer for our chosen function, with w_init as the fixed point where we show tangency
st.draw_it(w_init = 0, num_frames = 200)
```

In sliding back and forth, notice how it does not matter if we start from the left of our fixed point and move right towards it, or start to the right of the fixed point and move left towards it: either way the secant line gradually becomes tangent to the curve at $w^0 = 0$. There is no big 'jump' in the slope of the line if we wiggle the slider ever so slightly to the left or right of the fixed point: the slopes of the nearby secant lines are very similar to that of the tangent.
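The same behavior can be checked numerically without the slider. The sketch below is an illustration under stated assumptions, not the widget's implementation: the helper `secant_slope` and the list of gap sizes are choices made here. It computes secant slopes for the sinusoid at the fixed point $w^0 = 0$ as the second point approaches from the left and from the right.

```python
import numpy as np

# hypothetical helper: slope of the secant through (w0, g(w0)) and (w1, g(w1))
def secant_slope(g, w0, w1):
    return (g(w1) - g(w0)) / (w1 - w0)

g = np.sin
w0 = 0.0  # the fixed point

# shrinking distances between the moving point and the fixed point
gaps = [1.0, 0.1, 0.01, 0.001]

# approach the fixed point from the left (w1 < w0) and from the right (w1 > w0)
left_slopes = [secant_slope(g, w0, w0 - d) for d in gaps]
right_slopes = [secant_slope(g, w0, w0 + d) for d in gaps]

for d, left, right in zip(gaps, left_slopes, right_slopes):
    print(f"gap = {d:<6} left slope = {left:.6f}   right slope = {right:.6f}")
```

As the gap shrinks, both columns settle toward the same number, close to 1 for the sinusoid at $w^0 = 0$, which is the numerical counterpart of the secant line becoming tangent from either direction with no jump.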

When we can do this - come at a fixed point from either the left or the right and the secant line becomes tangent smoothly from either direction with no jump in the value of the slope - we say that a function has a derivative at this point, or likewise say that it is differentiable at the point.

If the slope of the secant line varies gradually - with no visible jumps - from both the left and right of a fixed point on a function, we say that a function has a derivative at this point, or likewise say that it is differentiable at the point. A function that has a derivative at every point is called differentiable.

Many functions like our sinusoid, other trigonometric functions, and polynomials are differentiable at every point - or just differentiable for short. You can tinker around with the previous Python cell - pick another fixed point! - and see this for yourself. You can also tinker around with the function - for example in the next cell we show - using the same slider mechanism - that the function

\begin{equation} g(w) = \text{tanh}(w)^2 \end{equation}

has a derivative at the point $w^0 = 1$.

```python
# the function we want to play with
g = lambda w: np.tanh(w)**2

# create an instance of the visualizer with this function
st = calclib.secant_to_tangent.visualizer(g = g)

# run the visualizer for our chosen function, with w_init as the fixed point where we show tangency
st.draw_it(w_init = 1, num_frames = 300)
```
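For readers without the widget, a rough numerical version of this experiment is sketched below. It is only an illustration: the helper `secant_slope` and the gap sizes are assumptions made here, and the reference value comes from the standard chain-rule result $\frac{\mathrm{d}}{\mathrm{d}w}\text{tanh}(w)^2 = 2\,\text{tanh}(w)\left(1 - \text{tanh}(w)^2\right)$, which this Section has not derived.

```python
import numpy as np

# hypothetical helper: slope of the secant through (w0, g(w0)) and (w1, g(w1))
def secant_slope(g, w0, w1):
    return (g(w1) - g(w0)) / (w1 - w0)

g = lambda w: np.tanh(w)**2
w0 = 1.0  # the fixed point

# shrinking distances between the moving point and the fixed point
gaps = [0.1, 0.01, 0.001, 0.0001]

# secant slopes approaching the fixed point from each side
left_slopes = [secant_slope(g, w0, w0 - d) for d in gaps]
right_slopes = [secant_slope(g, w0, w0 + d) for d in gaps]

# reference value from the chain rule (assumed here, not derived in this Section)
reference = 2 * np.tanh(w0) * (1 - np.tanh(w0)**2)

for d, left, right in zip(gaps, left_slopes, right_slopes):
    print(f"gap = {d:<7} left = {left:.6f}   right = {right:.6f}")
print(f"chain-rule reference = {reference:.6f}")
```

The slopes from both sides close in on the same reference value, consistent with the function being differentiable at $w^0 = 1$.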