In this Section we describe our first local optimization algorithm - random local search (sometimes called simulated annealing). With this instance of the general local optimization framework discussed in the previous Section we seek out a descent direction at each step by examining a number of random directions stemming from our current point. This manner of determining a descent direction, much like the global optimization scheme described in Section 5.2, scales terribly with the dimension of the input, ultimately disqualifying random search for use with many modern machine learning / deep learning applications. However this zero-order approach to local optimization is extremely useful as a simple example of the general framework introduced previously, allowing us to give simple yet concrete algorithmic examples of universally present ideas like descent directions, various choices for the steplength parameter, and issues of convergence.

The defining characteristic of the random local search (or just random search) - as is the case with every local optimization method - is how the descent direction $\mathbf{d}^{k-1}$ at the $k^{th}$ local optimization update step

\begin{equation} \mathbf{w}^{k} = \mathbf{w}^{k-1} + \mathbf{d}^{k-1}. \end{equation}is determined. With random search we do (perhaps) the laziest possible thing one could think to do in order to find a descent direction: we sample a given number of random directions stemming from $\mathbf{w}^{k-1}$, evaluate each candidate update point, and choose the one that gives us the smallest evaluation (so long as it is lower on the function than our current point). In other words, we look locally around the current point in a fixed number of random directions for a point that has a lower evaluation, and if we find one we move to it.

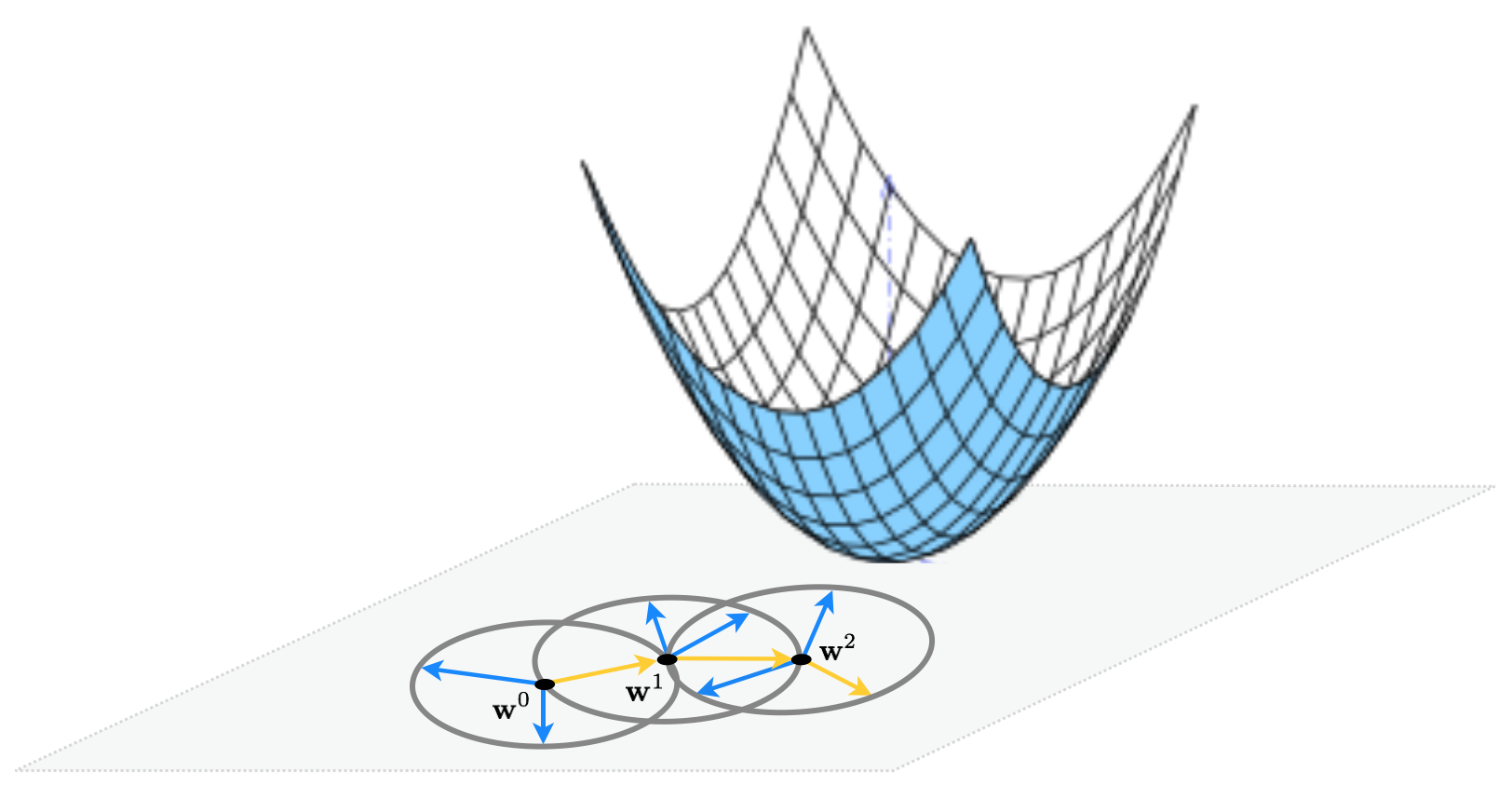

This idea is illustrated figuratively in the accompanying picture, where the function being minimized is the simple quadratic $g(w_1,w_2) = w_1^2 + w_2^2 + 2$, written more compactly as $g(\mathbf{w}) = \mathbf{w}^T \mathbf{w} + 2$. Here for visualization purposes we set the number of random directions sampled to $P = 3$. At each step only one of the three candidates produces a descent direction - drawn as a yellow arrow - while the other two are ascent directions drawn in blue.

To be more precise, at the $k^{th}$ step of this local method we pick a number $P$ of random directions to try out. Generating the $p^{th}$ random direction $\mathbf{d}^p$ stemming from the previous step $\mathbf{w}^{k-1}$ we have a candidate point to evaluate

\begin{equation} \mathbf{w}_{\text{candidate}} = \mathbf{w}^{k-1} + \mathbf{d}^{p} \end{equation}After evaluating all $P$ candidate points we pick the one that gives us the smallest evaluation i.e., the one with the index given by the smallest evaluation

\begin{equation} s = \underset{p=1...P}{\text{argmin}}\,\,g\left(\mathbf{w}^{k-1} + \mathbf{d}^p\right) \end{equation}
Finally, if the best point found has a smaller evaluation than the current point, i.e., if $g\left(\mathbf{w}^{k-1} + \mathbf{d}^s\right) < g\left(\mathbf{w}^{k-1}\right)$, then we move to the new point $\mathbf{w}^k = \mathbf{w}^{k-1} + \mathbf{d}^s$; otherwise we examine another batch of $P$ random directions and try again.

If we are to choose a set of directions at each step randomly, how shall we choose them? One idea could be to choose some distribution - e.g., a Gaussian - and (at each step) use samples from this distribution as our candidate directions. The only issue with doing this is one of consistency: each of the candidate directions - if constructed in this way - would have a different length. Since we have no a priori reason for allowing this at each step, to keep our random candidate directions consistent we can normalize them to have the same length, e.g., length one. Indeed this is how we illustrated the algorithm figuratively in the illustration above. If we use directions of unit length in our algorithm - i.e., where $\Vert \mathbf{d} \Vert_2 = 1$ always - this means that at each step of the algorithm we move a distance of length one since

\begin{equation} \Vert \mathbf{w}^k - \mathbf{w}^{k-1} \Vert_2 = \Vert \left(\mathbf{w}^{k-1} + \mathbf{d}\right) - \mathbf{w}^{k-1} \Vert_2 = \Vert \mathbf{d} \Vert_2 = 1. \end{equation}From here we can adjust each step to have whatever length we desire by introducing a steplength parameter $\alpha$ into each step to completely control how far we travel with each step (as discussed in the previous Section). This more general step looks like the following

\begin{equation} \mathbf{w}^k = \mathbf{w}^{k-1} + \alpha\mathbf{d} \end{equation}
The length of this step - using a unit-length direction - is now exactly equal to the steplength $\alpha$, as

\begin{equation} \Vert \mathbf{w}^k - \mathbf{w}^{k-1} \Vert_2 = \Vert \left(\mathbf{w}^{k-1} + \alpha\mathbf{d} \right) - \mathbf{w}^{k-1} \Vert_2 = \Vert \alpha \mathbf{d} \Vert_2 = \alpha \Vert \mathbf{d} \Vert_2 = \alpha \end{equation}Now at the $k^{th}$ step we try out $P$ unit-length random directions - but scaled by the steplength parameter so that the distance we travel is actually $\alpha$ - taking the one that provides the greatest decrease in function value.
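The construction just described can be sketched in a few lines of NumPy. This is only an illustrative sketch: the helper name `unit_directions`, the seeded generator `rng`, and the sample values of `w` and `alpha` are assumptions made for the example. It samples Gaussian vectors, rescales each to unit length, and checks that every candidate point lies a distance $\alpha$ from the current point.

```python
import numpy as np

def unit_directions(num_samples, dim, rng):
    """Sample Gaussian vectors and rescale each one to unit length."""
    directions = rng.standard_normal((num_samples, dim))
    norms = np.linalg.norm(directions, axis=1, keepdims=True)
    return directions / norms

rng = np.random.default_rng(0)   # seeded only to make the sketch repeatable
w = np.array([3.0, 4.0])         # current point (illustrative value)
alpha = 0.25                     # steplength (illustrative value)

directions = unit_directions(5, w.size, rng)
candidates = w + alpha * directions           # one candidate point per row

# distance from w to each candidate; each should equal alpha
step_lengths = np.linalg.norm(candidates - w, axis=1)
print(step_lengths)
```

Normalizing before scaling is what makes $\alpha$ the only quantity that controls how far each step travels.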

Below we summarize the random local search algorithm (using the steplength parameter) in a formal pseudo-code. Notice that we have chosen the output of the algorithm to consist of the entire history of both the weights and corresponding cost function value for each step of the process. This is done so we can empirically study the convergence of the algorithm afterwards for particular settings of the algorithm parameters.

1: input: initial point $\mathbf{w}^0$, maximum number of steps $K$, number of random samples per step $P$, a steplength $\alpha$ or diminishing steplength rule

2: for $\,\,k = 1...K$

3: compute $P$ unit length random directions $\left\{ \mathbf{d}^{p}\right\} _{p=1}^{P}$ (by e.g., sampling and normalizing an $N$ dimensional Gaussian)

4: find $s = \underset{p=1...P}{\text{argmin}}\,\,g\left(\mathbf{w}^{k-1} + \alpha \mathbf{d}^p\right)$

5: set $\mathbf{d}^{k} = \mathbf{d}^{s}$

6: form new point $\mathbf{w}^k = \mathbf{w}^{k-1} + \alpha\mathbf{d}^k$

7: if $g\left(\mathbf{w}^k\right) \geq g\left(\mathbf{w}^{k-1}\right)$

8: $\mathbf{w}^{k} \longleftarrow \mathbf{w}^{k-1}$

9: output: history of weights $\left\{\mathbf{w}^{k}\right\}_{k=0}^K$ and corresponding function evaluations $\left\{g\left(\mathbf{w}^{k}\right)\right\}_{k=0}^K$

Below we have a Python implementation of the random local search algorithm.

import numpy as np

# random search function
def random_search(g,alpha_choice,max_its,w,num_samples):
    # run random search
    weight_history = []         # container for weight history
    cost_history = []           # container for corresponding cost function history
    alpha = 0
    for k in range(1,max_its+1):
        # check if diminishing steplength rule used
        if alpha_choice == 'diminishing':
            alpha = 1/float(k)
        else:
            alpha = alpha_choice

        # record weights and cost evaluation
        weight_history.append(w)
        cost_history.append(g(w))

        # construct set of random unit directions
        directions = np.random.randn(num_samples,np.size(w))
        norms = np.sqrt(np.sum(directions*directions,axis = 1))[:,np.newaxis]
        directions = directions/norms

        ### pick best descent direction
        # compute all new candidate points
        w_candidates = w + alpha*directions

        # evaluate all candidates
        evals = np.array([g(w_val) for w_val in w_candidates])

        # if we find a real descent direction take the step in its direction
        ind = np.argmin(evals)
        if g(w_candidates[ind]) < g(w):
            # pluck out best descent direction
            d = directions[ind,:]

            # take step
            w = w + alpha*d

    # record weights and cost evaluation
    weight_history.append(w)
    cost_history.append(g(w))
    return weight_history,cost_history

Notice that the history of function evaluations returned is called cost_history. This is because - in the context of machine learning / deep learning - mathematical functions are often referred to as cost or loss functions.

Below we illustrate the use of this algorithm with a variety of examples.

In the next Python cell we run random local search for a maximum of 5 steps with $\alpha = 1$ for all steps, at each step examining $P = 1000$ random directions to minimize the simple quadratic

\begin{equation} g(w_0,w_1) = w_0^2 + w_1^2 + 2 \end{equation}The output of the cell shows the function in three-dimensions on the left, along with the set of steps produced by the algorithm colored from green - at the start of the run where we initialize at $\mathbf{w}^0 = \begin{bmatrix}3 \\ 4\end{bmatrix}$ - to red when the algorithm halts. Directed arrows illustrate each descent direction chosen, connecting each step to its predecessor, and are shown in the input space to help illustrate the total path the algorithm takes. In the right panel is the same picture, only viewed from directly above. Here contours of the function are drawn, with smaller values on the function corresponding to contours of decreasing radius and colored lighter blue.

# define function
g = lambda w: np.dot(w.T,w) + 2

# run random search algorithm
alpha_choice = 1; w = np.array([3,4]); num_samples = 1000; max_its = 5;
weight_history,cost_history = random_search(g,alpha_choice,max_its,w,num_samples)

# show run in both three-dimensions and just the input space via the contour plot
static_plotter.two_input_surface_contour_plot(g,weight_history,view = [10,30],xmin = -4.5, xmax = 4.5, ymin = -4.5, ymax = 4.5)

Notice that if the dimension of the input $N$ is greater than $2$ we cannot make a plot like the ones above to tell how well a particular run of random search performed - or any local optimization method for that matter. A more general way to view the steps from the particular run of any local method regardless of input dimension - which of course we do in order to examine the quality of its solution, and for the proper setting of the steplength parameter $\alpha$ as well as for general debugging purposes - is to plot the corresponding sequence of function evaluations. That is if the returned history of weights from a local method run is $\left\{\mathbf{w}^{k}\right\}_{k=0}^K$ we plot corresponding function evaluations $\left\{g\left(\mathbf{w}^{k}\right)\right\}_{k=0}^K$ as pairs $\left(k\,, g\left(\mathbf{w}^k\right)\right)$ as we demonstrate below. This allows us to tell - regardless of the input dimension $N$ - how the algorithm performed. This is why our random search algorithm returns the variable cost_history, which contains this sequence of function evaluations.

This visualization is called a cost function history plot - since mathematical functions are often referred to as cost functions in machine learning / deep learning - and is employed virtually whenever a local algorithm is used in practice. We produce a cost function history plot using the output of the previous example below. Notice an additional benefit of this visualization - we can more easily tell the exact value of each function evaluation during the previous run of random search.

# plot the cost function history for a given run

static_plotter.plot_cost_histories([cost_history],start = 0,points = True)
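Since `static_plotter` is not defined in this Section, here is a minimal plotting-free sketch of what a cost function history contains. The path used is an idealized one on the simple quadratic, in which every unit-length step points straight at the origin; it is an assumption made for illustration and not the output of the actual run, and the helper name `cost_history_pairs` is likewise hypothetical.

```python
import numpy as np

g = lambda w: np.dot(w.T, w) + 2

def cost_history_pairs(weight_history, g):
    """Return the pairs (k, g(w^k)) that a cost function history plot displays."""
    return [(k, float(g(w))) for k, w in enumerate(weight_history)]

# idealized path: start at (3, 4) and take unit-length steps straight toward the origin
w = np.array([3.0, 4.0])
d = -w / np.linalg.norm(w)            # unit direction pointing at the origin
weight_history = [w + k * d for k in range(6)]

pairs = cost_history_pairs(weight_history, g)
for k, cost in pairs:
    print(k, cost)
```

Because only the step index and the function value are needed, the same pairs can be formed for an input of any dimension $N$.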

As another example, we minimize the function

\begin{equation} g(w_0,w_1) = \text{tanh}(4w_0 + 4w_1) + \text{max}(0.4w_0^2,1) + 1 \end{equation}
using random local search, again setting $P = 1000$ and taking 8 steps with $\alpha = 1$ for all steps. Here because an entire region of global minima exists where $g(w_0,w_1) = 1$ the method - as clumsy as it is given the settings - quickly finds a global minimum when initiated at $\mathbf{w}^0 = \begin{bmatrix} 2 \\ 2 \end{bmatrix}$.

# define function
g = lambda w: np.tanh(4*w[0] + 4*w[1]) + max(0.4*w[0]**2,1) + 1

# run random search algorithm
alpha_choice = 1; w = np.array([2,2]); num_samples = 1000; max_its = 8;
weight_history,cost_history = random_search(g,alpha_choice,max_its,w,num_samples)

# show run in both three-dimensions and just the input space via the contour plot
static_plotter.two_input_surface_contour_plot(g,weight_history,view = [20,300],num_contours = 3,xmin = -3,xmax = 3,ymin = -5,ymax = 3)

In this example we show what one may need to do in order to find the global minimum of a function using (normalized) random local search. For visualization purposes we use the single-input function

\begin{equation} g(w) = \text{sin}(3w) + 0.1w^2 \end{equation}
We initialize two runs - at $w^0 = 4.5$ and $w^0 = -1.5$. For both runs we use a steplength of $\alpha = 0.1$ fixed for all 10 iterations. As can be seen from the result, depending on where we initialize we may end up near a local or a global minimum - here resulting from the first and second initialization respectively. We illustrate the steps of each run as circles along the input axis, with corresponding evaluations on the function itself marked by a similarly colored 'x'. The steps of each run are colored green near the start of the run to red when a run halts.

# define function
g = lambda w: np.sin(3*w) + 0.1*w**2

# run random search algorithm
alpha_choice = 0.1; w = 4.5; num_samples = 10; max_its = 10;
weight_history_1,cost_history_1 = random_search(g,alpha_choice,max_its,w,num_samples)

alpha_choice = 0.1; w = -1.5; num_samples = 10; max_its = 10;
weight_history_2,cost_history_2 = random_search(g,alpha_choice,max_its,w,num_samples)

# make static plot showcasing each step of this run
static_plotter.single_input_plot(g,[weight_history_1,weight_history_2],[cost_history_1,cost_history_2],wmin = -5,wmax = 5)
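In one dimension there are only two unit-length directions, $+1$ and $-1$, so the qualitative behavior of these two runs can be reproduced without any random sampling. The sketch below is a deterministic stand-in for the random search runs, not the implementation used above; the helper name `local_search_1d` is an assumption made for illustration.

```python
import math

g = lambda w: math.sin(3 * w) + 0.1 * w ** 2

def local_search_1d(g, w, alpha, max_its):
    """Try both unit directions (+1 and -1) at each step and move only if g decreases."""
    history = [w]
    for _ in range(max_its):
        candidates = [w + alpha, w - alpha]
        best = min(candidates, key=g)
        if g(best) < g(w):
            w = best
        history.append(w)
    return history

run_1 = local_search_1d(g, 4.5, 0.1, 10)    # first initialization
run_2 = local_search_1d(g, -1.5, 0.1, 10)   # second initialization

print(run_1[-1], g(run_1[-1]))
print(run_2[-1], g(run_2[-1]))
```

The two runs settle in different valleys of the function, and the second reaches the lower value, which is the point of the example: a local method only explores the neighborhood of wherever it is started.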

In the examples of the previous Subsection we kept the steplength parameter $\alpha$ fixed for all steps of each run. This choice - to take one value for $\alpha$ and use it for each and every step of the algorithm - is called a fixed steplength rule. This is a very common choice of steplength rule for local optimization methods in general, but one can also imagine changing the value of $\alpha$ from step to step in a single run of a local algorithm. A rule that adjusts the value of $\alpha$ from step to step is often referred to as an adjustable steplength rule, of which there are many in use.

In this Section we explore a set of examples that further exemplifies the importance of the fixed steplength rule, as well as perhaps the most common adjustable steplength rule used when applying local optimization in machine learning / deep learning: the so-called diminishing steplength rule.

Here we re-run the random local search algorithm using the same simple quadratic and algorithm settings as described in Example 1 (where in particular we used a fixed steplength rule setting $\alpha = 1$ for all steps). However now we initialize at the point $\mathbf{w}^0 = \begin{bmatrix} 1.5 \\ 2 \end{bmatrix}$ which - as can be seen in the printout generated by the next Python cell - prevents the algorithm from reaching the function's global minimum. Note here we do not color the contour plot so that the radius of choices provided by the particular fixed steplength $\alpha = 1$ can be made visible on the contour plot itself.

Here the algorithm - while having reached a low point of the function - has not reached the global minimum located at the origin $\begin{bmatrix}0 \\ 0 \end{bmatrix}$ (horizontal and vertical axes have been drawn in dashed black in the right panel to highlight this point). The algorithm stopped after taking 4 steps, at the point colored red. We visualize this in the right panel by drawing the contour of the quadratic on which the final point lies in dashed red, as well as the unit circle centered at the final point in blue, from which directions are sampled in search of a descent direction there. Notice how every point on the blue unit circle - representing a possible direction of length one stemming from the final step - lies on a contour of the quadratic that is greater than or equal to the one on which the final point lies. Hence every possible direction provides ascent, not descent, and the algorithm halts. In contrast to descent directions, such directions - which increase instead of decrease the function - are called ascent directions.

The problem here is that each direction we take must have length one since we have set $\alpha = 1$ for all steps - thus the length of each step must be length one as well. If we could take shorter steps - i.e., steps on a smaller radius than the blue circle shown in the right panel above - we could in fact descend onto lower contours of the quadratic, and get closer to the global minimum. This of course can be accomplished by using a smaller value for the steplength parameter $\alpha$.
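For this particular quadratic the stalling condition can be worked out directly. The short derivation below is a sketch added for illustration, using only the definition $g(\mathbf{w}) = \mathbf{w}^T\mathbf{w} + 2$ and a unit-length direction $\mathbf{d}$ scaled by a steplength $\alpha > 0$.

```latex
\begin{equation}
g\left(\mathbf{w} + \alpha\mathbf{d}\right) - g\left(\mathbf{w}\right)
= 2\alpha\,\mathbf{w}^T\mathbf{d} + \alpha^2 \Vert \mathbf{d} \Vert_2^2
= 2\alpha\,\mathbf{w}^T\mathbf{d} + \alpha^2
\geq \alpha\left(\alpha - 2\Vert \mathbf{w} \Vert_2\right)
\end{equation}
```

The lower bound is attained by the direction pointing straight at the origin, $\mathbf{d} = -\mathbf{w} / \Vert \mathbf{w} \Vert_2$. A descent direction of length $\alpha$ therefore exists only when $\alpha < 2\Vert \mathbf{w} \Vert_2$: with $\alpha = 1$ the search must halt once $\Vert \mathbf{w} \Vert_2 \leq \frac{1}{2}$, whereas with $\alpha = 0.1$ it can continue descending until $\Vert \mathbf{w} \Vert_2 \leq 0.05$.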

# define function
g = lambda w: np.dot(w.T,w) + 2

# run random search algorithm
alpha_choice = 1; w = np.array([1.5,2]); num_samples = 1000; max_its = 30;
weight_history_1,cost_history_1 = random_search(g,alpha_choice,max_its,w,num_samples)

# animate 2d slope visualizer
view = [40,-50]
optlib.random_local_search.visualize3d(g,weight_history_1,cost_history_1,view = view,wmax=max(w[0],w[1]),plot_final = True,axes = True)

We can visualize how the final 25 steps of this sort of run fail to descend by plotting the corresponding cost function history, which we do below. This common visualization tool really helps us to see how - after the first few steps - random search with these parameter settings fails to descend.

# plot the cost function history for a given run

static_plotter.plot_cost_histories([cost_history_1],start = 0,points = True)

Setting the steplength parameter $\alpha$ smaller we can make another run mirroring the one performed above, with much better results. Below we make the same run as above except now we set $\alpha = 0.1$ for all steps. Running the algorithm now we can see that it converges to a point much closer to the global minimum of the function at $\mathbf{w} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$.

# define function
g = lambda w: np.dot(w.T,w) + 2

# run random search algorithm
alpha_choice = 0.1; w = np.array([1.5,2]); num_samples = 1000; max_its = 30;
weight_history_2,cost_history_2 = random_search(g,alpha_choice,max_its,w,num_samples)

# animate 2d slope visualizer
view = [40,-50]
optlib.random_local_search.visualize3d(g,weight_history_2,cost_history_2,view = view,wmax=max(w[0],w[1]),plot_final = False,axes = True)

Notice however that we need to be careful in choosing the steplength value with this simple quadratic, and by extension any general function. If - for example - we run the same experiment again but cut the steplength down to $\alpha = 0.01$ we do not reach a point anywhere near the global minimum, as we show by performing the same run but setting $\alpha$ to this value in the next Python cell.

# define function
g = lambda w: np.dot(w.T,w) + 2

# run random search algorithm
alpha_choice = 0.01; w = np.array([1.5,2]); num_samples = 1000; max_its = 30;
weight_history_3,cost_history_3 = random_search(g,alpha_choice,max_its,w,num_samples)

# animate 2d slope visualizer
view = [40,-50]
optlib.random_local_search.visualize3d(g,weight_history_3,cost_history_3,view = view,wmax=max(w[0],w[1]),plot_final = False,axes = True)

Thus in general the steplength and the maximum number of iterations are best chosen together. The trade-off here is simple: a small steplength combined with a large number of steps can guarantee convergence towards a local minimum, but can be very computationally expensive. Conversely a large steplength and a small maximum number of iterations can - as we saw in Example 6 - be cheaper but less effective at finding small evaluation points.

# plot the cost function history for a given run

static_plotter.plot_cost_histories([cost_history_2,cost_history_3],start = 0,points = True,labels = [r'$\alpha = 0.1$',r'$\alpha = 0.01$'])
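A quick back-of-the-envelope check makes this trade-off concrete. With a fixed steplength the algorithm can travel at most $K\alpha$ in $K$ steps, so we can compare that budget against the distance from the initial point to the minimum. The variable names below are illustrative assumptions; the numbers are the settings used in the runs above.

```python
import math

w0 = (1.5, 2.0)                      # initial point used in the runs above
dist_to_min = math.hypot(*w0)        # distance from w0 to the minimum at the origin
max_its = 30

for alpha in (1, 0.1, 0.01):
    budget = max_its * alpha         # farthest the run can possibly travel
    reachable = budget >= dist_to_min
    print(f"alpha = {alpha}: budget = {budget:.2f}, distance = {dist_to_min:.2f}, reachable = {reachable}")
```

With $\alpha = 0.01$ the budget is far smaller than the distance to the origin, so no choice of directions could bring that run near the minimum in 30 steps.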

Another choice we have in choosing steplengths is to change the value at each step. For more advanced local search algorithms there are a host of ways of doing this, but with the simple method we discuss here there is really only one appropriate general scheme: to diminish the size of the steplength at each step. This is a safe choice of steplength because it ensures that the algorithm can get into any 'small nooks and crannies' where a function's minima may lie. This is often referred to as a diminishing steplength rule.

A common way of producing a diminishing steplength is to set $\alpha = \frac{1}{k}$ at the $k^{th}$ step of the process. This gives us the benefit of shrinking the distance between subsequent steps as we progress on a run since with this choice of steplength and a unit-length descent direction we have that

\begin{equation} \Vert \mathbf{w}^k - \mathbf{w}^{k-1} \Vert_2 = \Vert \left(\mathbf{w}^{k-1} + \alpha\mathbf{d}\right) - \mathbf{w}^{k-1} \Vert_2 = \Vert \alpha \mathbf{d} \Vert_2 = \alpha \Vert \mathbf{d} \Vert_2 = \alpha = \frac{1}{k}. \end{equation}
However at the same time, if we sum up the total distance the algorithm travels in $K$ steps (provided we indeed move at each step) we find

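\begin{equation} \sum_{k=1}^{K} \Vert \mathbf{w}^k - \mathbf{w}^{k-1} \Vert_2 = \sum_{k=1}^{K}\frac{1}{k}. \end{equation}
The beauty of this choice of steplength is that (i) the steplength shrinks to zero as $k$ increases, i.e., $\alpha = \frac{1}{k} \longrightarrow 0$ as $k \longrightarrow \infty$, and (ii) the total distance traveled by the algorithm grows without bound as the number of steps increases, i.e., $\sum_{k=1}^{K}\frac{1}{k} \longrightarrow \infty$ as $K \longrightarrow \infty$.

The latter condition follows from the fact that $\sum_{k=1}^{\infty}\frac{1}{k} = \infty$ (this is the classic harmonic series).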

So in theory this means that an algorithm employing this sort of diminishing steplength rule can move around an infinite distance in search of a minimum all the while taking smaller and smaller steps, which allows it to work into any 'small nooks and crannies' a function might have where a minimum might lie.
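Both properties are easy to check numerically. The sketch below (the helper name `total_distance` is an assumption for illustration) prints the length of the last step alongside the total distance traveled after $K$ steps, assuming the algorithm actually moves at every step, with $\ln K$ shown for comparison.

```python
import math

def total_distance(K):
    """Total distance traveled in K steps when alpha = 1/k and every step moves."""
    return sum(1.0 / k for k in range(1, K + 1))

for K in (10, 100, 1000, 10000):
    last_step = 1.0 / K
    print(K, last_step, total_distance(K), math.log(K))
```

The individual steps shrink toward zero while the running total keeps growing, roughly like $\ln K$: slowly, but without bound.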

In the cell below we make two runs of random search using a famous optimization test function called the Rosenbrock function which takes the form

\begin{equation} g\left(w_0,w_1\right) = 100\left(w_1 - w_0^2\right)^2 + \left(w_0 - 1\right)^2. \end{equation}
This function (whose contour plot is shown in the left panel below) has a global minimum at the point $\mathbf{w}^{\star} = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$ located in a very narrow and curved valley.

With both runs we begin at the point $\mathbf{w} = \begin{bmatrix} -2 \\ -2 \end{bmatrix}$, examine 1000 sample directions per step, and take a maximum of 50 steps. First - as illustrated below - we use a fixed steplength value $\alpha = 1$. We can see that the procedure halts after just 5 steps because every direction stemming from the final point of length one is an ascent direction. The method was able to enter the long curved narrow valley where the minimum is located, but cannot properly navigate inside it because the steplength value is too large.
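As a quick sanity check, the sketch below evaluates the Rosenbrock function in the form used by the code cells of this example (the helper name `rosenbrock` is an assumption for illustration). It evaluates the function at the global minimum, at the initial point used in the runs, and at a point a short distance across the valley from the minimum.

```python
def rosenbrock(w):
    """Rosenbrock test function, written as in the code cells of this example."""
    return 100 * (w[1] - w[0] ** 2) ** 2 + (w[0] - 1) ** 2

print(rosenbrock([1, 1]))      # value at the global minimum
print(rosenbrock([-2, -2]))    # value at the initial point used in the runs
print(rosenbrock([1.1, 1.0]))  # a short move across the valley from the minimum
```

Even a move of length $0.1$ away from the minimum raises the function value noticeably, which is why large fixed steps struggle inside the valley.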

# define function
g = lambda w: 100*(w[1] - w[0]**2)**2 + (w[0] - 1)**2

# run random search algorithm
alpha_choice = 1; w = np.array([-2,-2]); num_samples = 1000; max_its = 50;
weight_history_1,cost_history_1 = random_search(g,alpha_choice,max_its,w,num_samples)

# show run in both three-dimensions and just the input space via the contour plot
static_plotter.two_input_contour_plot(g,weight_history_1,num_contours = 9,xmin = -2.5,xmax = 2.5,ymin = -2.25,ymax = 2)

Now we make the same run but use the diminishing steplength rule $\alpha = \frac{1}{k}$. Because the steplength is constantly shortened the procedure never encounters a problem like the run above, and completes all 50 iterations. More importantly the diminishing steplength allows this local method to better navigate the long narrow valley that contains the global minimum - and so it can get substantially closer to it than can a run with a fixed steplength value. Below we make this run, plotting the original fixed steplength run in the left panel and the run produced with the diminishing steplength rule in the right panel.

# define function
g = lambda w: 100*(w[1] - w[0]**2)**2 + (w[0] - 1)**2

# run random search algorithm
alpha_choice = 'diminishing'; w = np.array([-2,-2]); num_samples = 1000; max_its = 50;
weight_history_2,cost_history_2 = random_search(g,alpha_choice,max_its,w,num_samples)

# show run in both three-dimensions and just the input space via the contour plot
static_plotter.compare_runs_contour_plots(g,[weight_history_1,weight_history_2],num_contours = 10,xmin = -2.5,xmax = 2.5,ymin = -2.25,ymax = 2,show_original = False)

As with the global optimization approach discussed in the previous Section, the curse of dimensionality also poses a major obstacle to random local search as the dimension of a function's input increases. We illustrate this using a sequence of simple quadratic functions (where we will gradually increase the input dimension $N$)

\begin{equation} g\left(\mathbf{w}\right)=\mathbf{w}^{T}\mathbf{w}+2 \end{equation}starting at the point

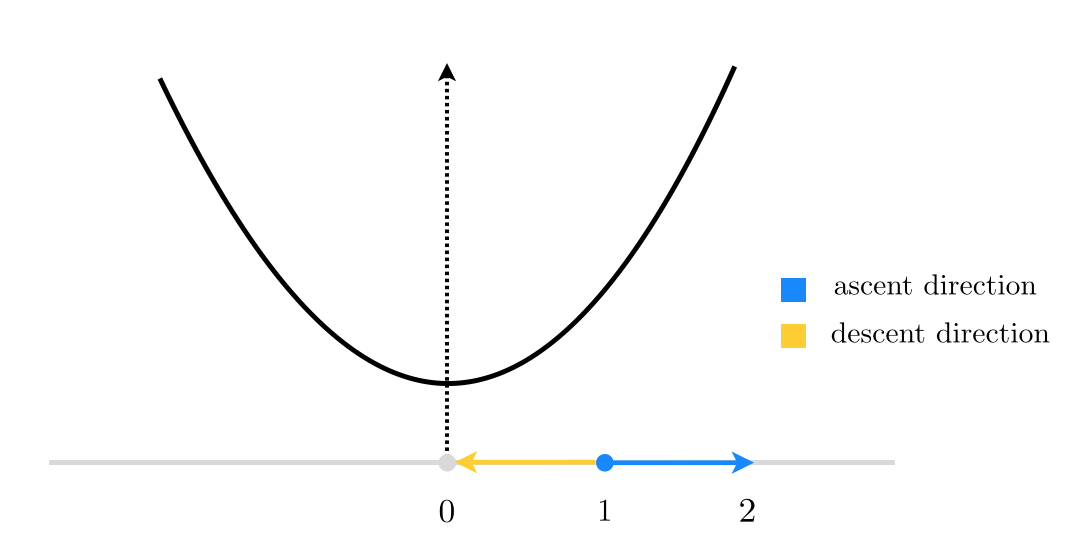

\begin{equation} \mathbf{w}^{0}=\left[\begin{array}{c} 1\\ 0\\ 0\\ \vdots\\ 0 \end{array}\right]_{ N\times1} \end{equation}When $N=1$, this reduces to finding a descent direction at random for the function $g(w)=w^2$ starting at $w^0=1$, as shown in the figure below.

Here, starting at $w^0=1$, there are only 2 unit directions we can move in: (i) the negative direction toward the origin shown in yellow, which is a descent direction (as it takes us to the minimum of our quadratic function), or (ii) away from the origin shown in blue, which is indeed an ascent direction (as the function evaluation increases at its endpoint). So in this case, if we decide to choose our direction randomly we will have a $\frac{1}{2}=50\%$ descent probability. Not too bad!

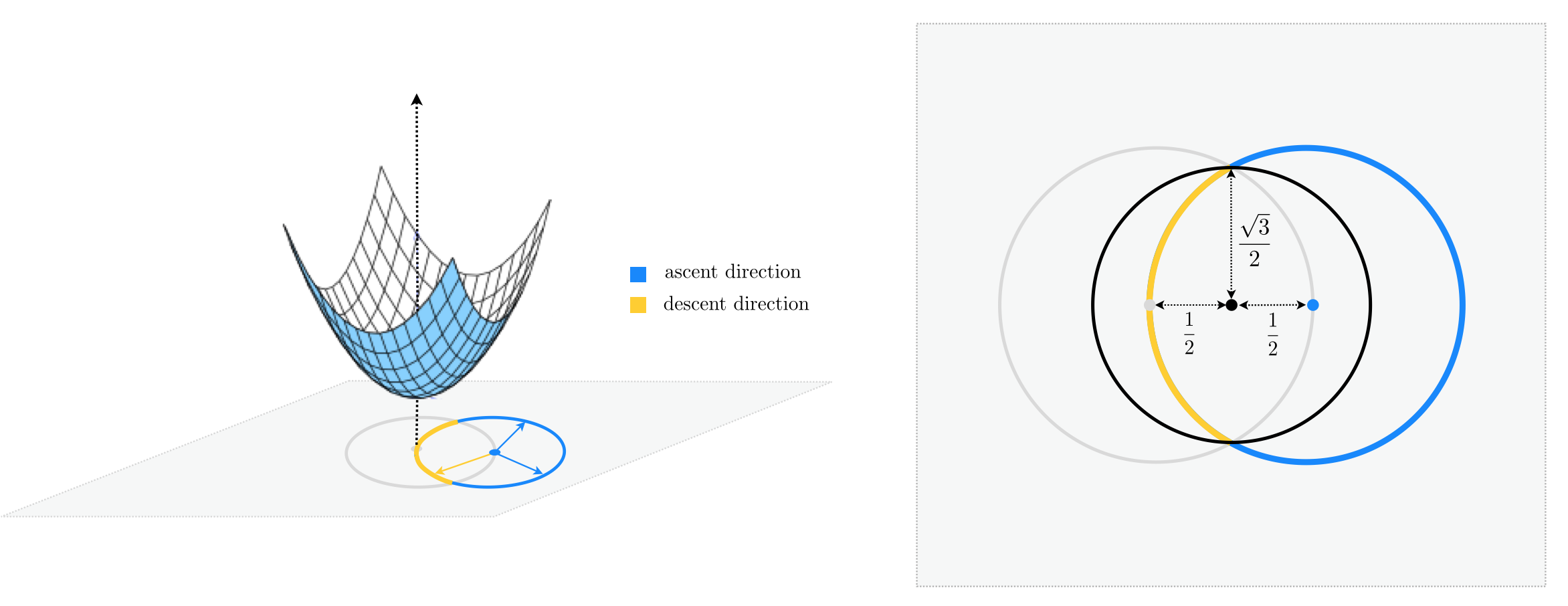

Let's see what happens when $N=2$. As you can see in the figure below, starting at $\mathbf{w}^{0}=\left[\begin{array}{c} 1 \\ 0\end{array}\right]$ (shown by a blue circle) we now have infinitely many unit directions to choose from, where only those whose endpoints lie inside the unit circle (centered at the origin) are descent directions. Therefore if we were to choose a unit direction randomly, the descent probability would be calculated as the length of the yellow arc in the figure divided by the entire length of the unit circle centered at $\mathbf{w}^{0}$.

\begin{equation} \text{descent probability}=\frac{\text{length of yellow arc}}{\text{length of unit circle}} \end{equation}For more clarity, the two-dimensional input space is re-drawn from above in the right panel of the figure below.

Notice that the black circle shown in the right panel, centered at the midpoint of $\mathbf{w}^{0}$ and the origin, completely encompasses the yellow arc, and hence one-half of its circumference is greater than the length of the yellow arc. In other words, the length of the yellow arc is upper-bounded by the length of the black semi-circle that lies inside the unit circle, and we have

\begin{equation} \text{descent probability} < \frac{\text{length of black semi-circle}}{\text{length of unit circle}} \end{equation}
Both the numerator and the denominator are now easy to compute, noticing that a simple application of the Pythagorean theorem gives the radius of the black circle as $\frac{\sqrt{3}}{2}$.

\begin{equation} \text{descent probability} < \frac{\frac{1}{2}\cdot 2\pi\left(\frac{\sqrt{3}}{2}\right)}{2\pi\left(1\right)} = \frac{\sqrt{3}}{4} \approx 0.433 \end{equation}
Therefore in two dimensions, the chance of randomly selecting a descent direction is at most 43%, down 7 percentage points from its value in one dimension.

This rather slight decrease may not seem like a deal breaker at first, but as we travel into larger and larger dimensions we can easily determine that the descent probability shrinks exponentially in $N$.

This is because in higher dimensions we can still use the same geometric argument we made above to find an upper bound on the descent probability, only this time we are dealing with hyperspheres instead of circles. More specifically, in $N$ dimensions we can write

\begin{equation} \text{descent probability} < \frac{1}{2}\left(\frac{\sqrt{3}}{2}\right)^{N-1} \end{equation}
So, for instance, when $N=30$ the descent probability falls below 1%.

Here we empirically confirm the curse of dimensionality problem described above for the simple quadratic used there. In the Python cell below we gradually increase the dimension of the input to this quadratic from $N = 1$ to $N = 25$, and starting at the $N$ dimensional input point $\mathbf{w}^{0}=\left[\begin{array}{c} 1 \\ 0 \\ \vdots \\ 0\end{array}\right]$ we create $10,000$ random unit directions and evaluate each candidate point $\mathbf{w}_{\text{candidate}} = \mathbf{w}^0 + \mathbf{d}$ - where $\mathbf{d}$ is a random unit direction - via the quadratic.

The printout shows what portion of the sampled directions provide a decrease in function evaluation - or in other words what portion of those sampled are descent directions - among the first $100$, $1,000$, and $10,000$ directions sampled. As we can see from the printout this portion vanishes rapidly to zero as $N$ increases, even when $10,000$ random directions are chosen.

# run experiment

optlib.random_method_experiments.random_local_experiment()
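The body of `optlib.random_method_experiments.random_local_experiment` is not shown here, so the following is an independent minimal sketch of the same kind of experiment rather than that function's implementation. The helper names, the seed, and the chosen values of $N$ are assumptions. It estimates the fraction of random unit directions that decrease the quadratic from $\mathbf{w}^0$ and prints it next to the geometric bound $\frac{1}{2}\left(\frac{\sqrt{3}}{2}\right)^{N-1}$, i.e., half the surface of a hypersphere of radius $\frac{\sqrt{3}}{2}$ relative to that of the unit hypersphere.

```python
import numpy as np

def descent_fraction(N, num_samples, rng):
    """Fraction of random unit directions d with g(w0 + d) < g(w0) for g(w) = w^T w + 2."""
    w0 = np.zeros(N)
    w0[0] = 1.0
    directions = rng.standard_normal((num_samples, N))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    candidates = w0 + directions
    evals = np.sum(candidates * candidates, axis=1) + 2
    return np.mean(evals < np.dot(w0, w0) + 2)

def upper_bound(N):
    """Geometric upper bound (1/2) * (sqrt(3)/2)^(N-1) on the descent probability."""
    return 0.5 * (np.sqrt(3) / 2) ** (N - 1)

rng = np.random.default_rng(0)   # seeded only so the sketch is repeatable
fractions = {N: descent_fraction(N, 10000, rng) for N in (1, 2, 5, 10, 25)}
for N, frac in fractions.items():
    print(N, frac, upper_bound(N))
```

For $N = 1$ the bound is attained exactly, so the sampled fraction there fluctuates around one half; for larger $N$ the sampled fraction drops quickly and stays below the bound.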

The true global minimum here is located at $(w_1,w_2) = (0,0)$, which we do not get that close to in the above run. However if we increase both the number of directions sampled at each step $P$ as well as the total number of iterations we can get closer. We do this in the next Python cell - increasing $P$ to $100$ and letting the maximum number of iterations equal 10.

As we have seen random local search - our first algorithm worthy of coding up - is crippled by the curse of dimensionality, being highly inefficient even for functions with just 30 dimensional input. Because many modern machine learning cost functions have input dimension $N$ on the order of thousands to hundreds of millions, random search itself is not practically useful in the context of most machine learning / deep learning.

By far the biggest problem with random local search lies in the highly inefficient way in which descent directions - that is directions that lead us downward in a function - are found. If we had a better (i.e., computationally cheaper) way of finding descent directions then the local method paradigm would work extremely well. This is precisely what we do in the next Section and in particular the next two Chapters, which are dedicated solely to the pursuit of finding good and computationally cheap descent directions for the local paradigm. There we will show how calculus can be leveraged to determine cheap and effective descent directions to power the local method scheme, leading to powerful algorithms called gradient descent and Newton's method.

At the first step of the random local search algorithm we move from our initialization $\mathbf{w}^0$ to a new point $\mathbf{w}^1$, in a unit-length descent direction $\mathbf{d}$ from the initial point. We decide on this direction by sampling random unit-length directions stemming from $\mathbf{w}^0$. This gives $P$ random points on the unit hypersphere centered at $\mathbf{w}^0$, i.e., the set of points $\mathbf{w}^0 + \mathbf{d}$ where $\Vert \mathbf{d} \Vert_2 = 1$. In other words, the first step of our random local search algorithm can be thought of as an imperfect computational approach to finding the smallest value of $g\left(\mathbf{w}^0 + \mathbf{d}\right)$ - that is, the very best unit length descent direction - over all unit length directions $\mathbf{d}$. This problem can be stated formally as the constrained optimization problem

\begin{equation} \begin{array}{cc} \underset{\mathbf{d}}{\text{minimize}} & g\left(\mathbf{w}^0 + \mathbf{d}\right) \\ \text{subject to} & \Vert \mathbf{d} \Vert_2 = 1 \end{array} \end{equation}
which is precisely like the optimizations we have looked at so far, only now we are not free to search over every possible $\mathbf{d}$ - only those allowed by the constraint (here those with unit length). Like our original problem this cannot be solved 'by hand' in general. If we could we would derive the optimal descent step for $g$ at $\mathbf{w}^0$ and there would be no need to perform the random sampling, which again is just our attempt to approximately solve this problem.

Likewise at the $k^{th}$ step of the algorithm, we perform the same sort of random search beginning at the point $\mathbf{w}^{k-1}$ - we are searching for the minimum value of $g$ over the unit hypersphere centered here (the set of points $\mathbf{w}^{k-1} + \mathbf{d}$ where $\Vert \mathbf{d} \Vert_2 = 1$). In other words, we can think of this as an approximation to the solution of the constrained problem

\begin{equation} \begin{array}{cc} \underset{\mathbf{d}}{\text{minimize}} & g\left(\mathbf{w}^{k-1} + \mathbf{d}\right) \\ \text{subject to} & \Vert \mathbf{d} \Vert_2 = 1 \end{array} \end{equation}

As with the first step, if we could solve the above problem 'by hand' we would not need to randomly sample directions in search of the one providing greatest descent, we could simply solve the problem to recover the best direction for $g$ at $\mathbf{w}^{k-1}$ directly.
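For the simple quadratic $g(\mathbf{w}) = \mathbf{w}^T\mathbf{w} + 2$ this constrained problem happens to be solvable by hand: the best unit-length direction points straight at the origin, $\mathbf{d}^{\star} = -\mathbf{w}^{k-1} / \Vert \mathbf{w}^{k-1} \Vert_2$. The sketch below (names, seed, and sample values are illustrative assumptions) compares that exact solution with the best of $P$ random unit directions, showing that random sampling only approximates it.

```python
import numpy as np

g = lambda w: np.dot(w, w) + 2

rng = np.random.default_rng(0)     # seeded only so the sketch is repeatable
w = np.array([3.0, 4.0])           # current point (illustrative value)

# exact solution of the constrained problem for this particular g
d_star = -w / np.linalg.norm(w)
exact_value = g(w + d_star)

# approximate solution: best of P random unit directions
for P in (3, 100, 10000):
    directions = rng.standard_normal((P, w.size))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    best_value = min(g(w + d) for d in directions)
    print(P, best_value, exact_value)
```

The sampled value can never fall below the exact one, and in two dimensions it approaches it as $P$ grows; for a general $g$ no such closed-form direction is available, which is what motivates the sampling.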

Finally notice how we could turn our perspective around with regards to developing the random local search algorithm. That is we could have started with the formal description of the $k^{th}$ step above. Having determined that the best descent direction could not be determined by solving the above 'by hand' in general, we could have then stumbled onto the $k^{th}$ step of the random local search algorithm as a way of approximately solving it.